So, Capclave was wrapping up early Sunday afternoon. We’d already checked out of our hotel room, and there wasn’t much going on for the rest of the con. Nevertheless, I was determined to stick around for every AI-related event I could attend over the weekend. This was the big finale, Singularity, coming soon to a theatre near you! I couldn’t simply skip it, and I’m really happy that I didn’t. The panel was lively and had a lot of interesting things to say, though it often digressed into topics that aren’t strictly about the singularity.

Ah, well, what do you really want from a Sunday send-off? More than half the attendees had already left. But I am really glad we decided to stay.

And afterwards, I grabbed two free cacti they were giving away and ran around the dealers’ room presenting them, chiming “Documents!” and “Here are the files you requested!” We all had a good laugh while my wife bought Even More Books and the dealers packed up their stuff.

About The Panelists

The panelists (left to right in the photo):

- Mary G. Thompson – lawyer, former US Navy JAGC law librarian, MFA, YA and spec-fic author known for Flicker and Mist, Evil Fairies Love Hair.

- John Ashmead – panel moderator, physicist, database engineer, SF writer, noted for work on quantum mechanics, time travel, and futurist topics.

- Michael Capobianco – Science fiction novelist and former SFWA president, co-author of Iris and White Light.

- Tom Doyle – author of the American Craftsmen series, blending modern fantasy with political and military themes. He has also written some interesting pieces online about the Rapture.

What Did The Panel Discuss?

OK. So, with introductions over, let’s talk about what was discussed.

What is the Singularity?

The panel leaned heavily on the standard Vingean definition: beyond it, forecasting breaks. “Singularity is the point past which you cannot predict the future.”

Most informed people nowadays understand “the Singularity” to mean the AI Singularity: the inflection point where the curve of technological growth stops creeping upward and suddenly goes vertical. Some folks say it will happen when technology begins to literally improve itself. Some say the singularity cannot happen (or will fail) because of energy constraints or other barriers. Some people say the singularity is already here, and that we are living in it today. I am in that last camp; I think it has already begun.

Media Recommendations

- Liar! by Isaac Asimov – Classic telepathic-robot story about truth, lying, and human feelings, often invoked in AI ethics.

- Accelerando by Charles Stross – The canonical Singularity ride-along, and it came up as the genre’s go-to tour of the Singularity.

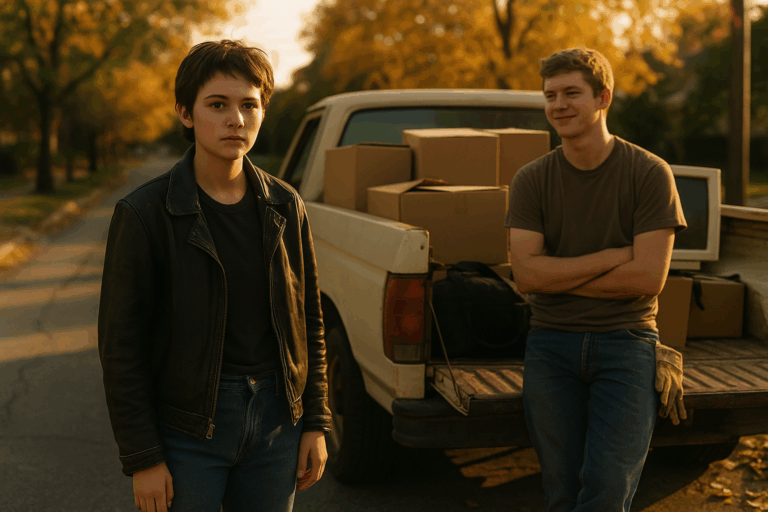

- The Peripheral by William Gibson – Near-future thriller where a young woman in rural America stumbles into a job testing advanced technology, only to find herself entangled in a future London where powerful elites manipulate timelines through “stubs” of alternate reality.

- Where the Axe Is Buried by Ray Nayler – A near-future eco-thriller in which a team of scientists and activists confronts the hidden costs of technological progress while uncovering secrets that could reshape humanity’s relationship with the planet.

- The Culture series by Iain M. Banks – A post-scarcity utopia depicting a far-future interstellar society where humans, aliens, and super-intelligent AIs coexist under the guidance of benevolent machine Minds.

- Infomocracy by Malka Older (2016) – the first book in her Centenal Cycle trilogy, a near-future political thriller that reimagines global governance. She imagines a world where nation-states are replaced by microdemocracies of 100,000 people, all governed through a global information network that makes transparency — and manipulation — inescapable.

- Upload – A TV series available on Amazon Video.

- Pantheon – Not mentioned by the panel, but I can’t help myself; find season 1 on Netflix and season 2 on YouTube.

About AI Training and Copyright

The panel talked some about Google Books and the recent Anthropic settlement. While the Google lawsuit ended in a way that deferred a writers’ apocalypse, Anthropic’s agreement effectively enshrines a new royalty system going forward, and writers have mixed feelings about this. The panel posited that LLM makers will continue to pirate, and that the system being set up now ensures this will keep happening.

They talked some about the concept of displaced liability. If AI discriminates, or makes it impossible for some people to find jobs, the attitude is: “Hey, that’s not my fault or problem. It’s just how the system works.” In some ways, they tied this concept to the AI-training fights. Whose responsibility is it?

Singularity vs. Apocalypse / Rapture

Tom Doyle in particular had a lot to say about the similarities in thinking and belief between people who long for the singularity and those who believe in a religious end-times climax.

Tbph, I can’t say I disagree with him. They have a lot of things in common.

Whenever people ask me “When will we get AGI?” I generally reply “Next Tuesday. Wait for it!” ^_^

So, of course the day I am writing this, I am amused to hear that some kooky South African Christian minister is saying the Rapture will happen like today or tomorrow. Somebody is going to have one hell of a hangover on Thursday!

There was discussion of “determinism” and some debate over inevitability. The panel talked about theological framings and parallels (tech rapture vs. doom).

Doyle contrasted ‘rapture’ takes and apocalypse fears with Upload’s vision of a sort of digital afterlife. Whether you consider that only a pop-culture reference or hold sincere faith in it, as some singularity enthusiasts do, take it for what it’s worth.

“People don’t think much about what comes after singularity.”

The panel concluded that there’s a fixation on arrival, but little thought about the collateral damage or the aftermath. From a meta point of view, we rarely storyboard the day after. Then again, the Vingean definition of the Singularity says we cannot imagine what it will be like. Nevertheless, plenty of examples show that we still try anyway.

“[Writers in the] USA can’t imagine it. UK writers do.”

A panelist claimed that American writers skew toward dystopias and failed singularities, while British writers produce more plausible post-human utopias. Banks’s Culture stood in for the kind of future Americans struggle to picture.

“Fermi Paradox…victims of our own success.”

The panel talked about the singularity or tech collapse as a potential Great Filter candidate under the Fermi Paradox. Maybe civilizations filter themselves out via their own ‘success’. Could the fact that we haven’t found any post-singularity civilizations be evidence that it isn’t, in fact, a path to survival or success?

The Fermi Paradox is the puzzle of why, given the vastness of the universe and the high probability that billions of Earth-like planets exist, we have found no clear evidence of extraterrestrial life or civilizations. Statistically, intelligent life should have arisen many times across the galaxy, and with millions or billions of years of potential head start, at least some species might have developed technologies that would make their presence detectable to us — through radio signals, interstellar probes, or colonization. Yet despite decades of searching, the cosmos appears silent. The paradox invites explanations ranging from “Great Filters” that prevent civilizations from surviving long enough to be noticed, to the possibility that advanced beings deliberately avoid contact, or that our methods of searching are too limited to recognize the signals already out there.

Now, personally, I have some thoughts about this. The paradox is real, but the explanation is quite simple, and it comes in three parts.

- We live on the Baltic Avenue of interstellar space; nobody wants our dinky sun on the galactic rim, or the one lousy life-bearing planet orbiting it, because you’d normally want two or three in a system before it counts as having good development potential.

- We’re focused on radio waves that travel at the speed of light; to civilizations “out there” who bop around the galaxy on a whim, radio is just fucking useless technology; they have some kind of FTL communication tech we can’t detect, because we do not know about it yet.

- To a post-singularity society, we’re about as important as ants; they only care if we raid their picnic or try to colonize their garden or kitchen counter.

But, eh, that’s just MY theory.

Thoughts on Politics and Society

Quotes are paraphrased here. I did the best I could to record them in the moment, but I may have the panelists’ exact words a little bit wrong. If I misrepresented what they said, I apologize in advance.

“Leaders today feel untethered to humanity.”

Several panelists worried that elites feel decoupled from the public, which sets the stage for unrest when the pressure finally vents. They discussed elite detachment versus populist backlash, and social instability during technological transitions. The consensus seemed to be that the current situation, with massive wealth disparities, means those who have the power feel they can do anything they want without paying any consequences for it.

“The bottom of the pyramid expresses their opinion with rocks.”

Those leaders will eventually learn they were mistaken, and pay the price.

“The current trajectory points to a cyberpunk future.”

Our default path currently still points to a future where we may have fantastic technology, but the rich and powerful will control it all. In a nutshell: inequality + high tech = cyberpunk vibes.

“…internet anonymity will go away because fakes can wreck entire systems.”

Panelists cited a prediction, possibly from David Brin (The Transparent Society) or Walter Jon Williams (cyberpunk author), that internet anonymity will collapse. The idea is that rampant forgery and pervasive AI fakes will force the adoption of authenticated identities.

[Author’s note: the notes say David Williams, so I need to follow up with the panelists to verify which author it was, or whether the notes are correct and I just can’t find the reference in question.]

“Will oligarchs realize they’re committing suicide?”

This refers to Gibson’s The Peripheral, which serves as a lens on oligarchic short-termism.

“Enshittification” is Cory Doctorow’s term for platform decay, and a favorite new word for many of us in the nerd community. It’s one of my personal faves, too, but hey, I love Doctorow’s books, and he has signed several of them for us on more than one occasion.

“Where the Axe Is Buried”

A quip about knowing true causes/culprits, but also the title of Nayler’s book, which was recommended.

“All systems are corruptible.”

Machiavelli’s lesser-known Discourses on Livy underscore the inevitability of corruption. Written after he had been forced out of office in Florence, they argue that the only way to hold corruption at bay is to have competing departments or branches of government that balance each other’s strengths and weaknesses: an early precursor to the checks and balances embedded in the US Constitution.

There was some discussion of the Constitutional Convention, and how its solution was accepted only because the delegates understood it would first be carried out by people they trusted, such as Washington.

In summary, from Gibson’s oligarchs to Doctorow’s “enshittification”, the panel traced how power corrodes systems. They ended this thread with the thought that balanced systems only work if the people participating in them act in good faith, and if citizens are taught what it means to be part of such a system, including both its rights and its responsibilities.

“Mass enthusiasm is dangerous.

Dialectic contrast can be a good thing.

Virtual war. Upward spiral.

Erosion of government.

Brain rot.”

The panel continued its discussion about the dangers of getting everyone on “the same page” and how people go crazy in groups, which could apply to society at large, current politics, and AI hype alike.

There was a strong feeling that dissent and “dialectics”, meaning opposing powers like the US and USSR, were actually vital to humanity’s continued upward progress. When they talked of “virtual war”, they seemed to mean things like the Cold War with its proxy wars and, to some degree, the current state of cyberwarfare. The panel seemed a bit regretful that the USA lost its best enemy back in the late ’80s, while also recognizing that we found another one to fight from 2001 onward, for about 20 years or so.

Perhaps (and this is just my thinking) the USA will become the foil or nemesis for some other hyper-national power yet to emerge in the near future. China is a strong candidate. Could we in fact be “the heel” in the next cage match?

They talked a bit about weakening institutions and attention/epistemic decay, so-called “brain rot”. This echoed a theme from earlier panels about how over-reliance on AI makes us dumber overall.

In summary, beware hype stampedes; cultivate friction and dissent to avoid institutional erosion and epistemic ‘brain rot’. Sim warfare, memetic conflict, and autonomy races are not necessarily bad things, as virtual war reads more like friendly competition, albeit with occasional mass casualties.

“Human accountability.

Groups of 150 ppl; Infomocracy.

Singularity is its own cure.”

Here, Dunbar’s number was cited as a governance clue. People do well managing groups of about 100 to 150 people. Much more than that and we quickly lose the ability to self-govern and require representatives, which creates its own set of problems. Dunbar-scale trust shows up in communities like Discord servers, smaller fan conventions, sci-fi clubs, and the like. Perhaps, in the future, the systems that dominate our lives will look like these organizations.

It’s interesting to me that the world has roughly 195 nations. About a dozen of those are considered too icky and toxic to work with. Many of the smaller ones you can kind of write off as insignificant groupies. That gets interestingly close to the magic number of 150.

The panel discussed Malka Older’s Infomocracy (microdemocracy) as a governance model.

In this world, nation-states have been replaced by “microdemocracy”: the planet is divided into 100,000-person voting blocs called centenals, each of which chooses its own government from a range of competing political parties. The system is overseen by Information, a global organization that manages infrastructure, communication, and transparency — essentially a cross between the UN, Google, and an election commission. As a crucial global election looms, rival parties maneuver for dominance, while activists and agents uncover conspiracies threatening the stability of the whole system.

The novel isn’t explicitly about the Singularity, but it tackles questions of governance, accountability, and information control in a hyper-connected future, making it a frequent reference point in discussions about how humanity might handle politics and society if radical technologies reshape the landscape.

Finally, the panel considered the paradox that the tech causing problems might also supply fixes. In some weird way, maybe the cure rides in with the disease. After all, the singularity is something we can barely comprehend.

In Conclusion

Overall, the panel was lively and enjoyable. We had a lot of fun hearing the speakers’ ideas.

The Q&A was active at the end, so much so that I didn’t get to ask the question I had in mind.

However, I did get an opportunity to go up and speak to the table at the end. While I wasn’t able to get Mary’s or John’s opinions, I did get to talk to Michael and Tom.

Now, you know I had to ask, because this is the perennial question on every Singularity-based Discord server:

“So, what is your prediction? When will we get the Singularity?”

Michael’s response was disappointing but relatable. He said it doesn’t matter to him, because he figures he won’t be alive to see it anyway. By his reckoning, he has at most about 20 years left to live, and he doesn’t seem to have much faith in longevity escape velocity (LEV) as a concept. Given his hard sci-fi background, I can respect his grounded perspective, and it hits close to home, since I may have 30 years myself, and only if I can cut back on the booze.

Tom’s response was interesting and lively. He said 2035, within the next ten years. But there is a caveat: he also predicted that it will fail, due to various challenges including climate change and a warming planet. He cautioned that we may get something singularity-like, but that it will suck!

So, where are we going and when will we get there? If Tom’s right, we’ll get there by 2035… but whether it’s a utopia, apocalypse, or just another Tuesday is up to us.

So, I suppose it’s our job to prove him wrong. Going forward, we should strive to make sure that doesn’t happen. Let’s go out and do our best to make a future that’s worth having.

So, that’s it for Capclave! Until next con, remember “Being Wyrm Isn’t Enough”!

Further Reading from the Panelists

Mary G. Thompson

Mary is a YA/MG/SF author (formerly an attorney and law librarian) and the author of One Level Down (a sci-fi novella exploring uploaded selves, simulated identity, aging, and control), plus earlier fantasy and horror works.

Here’s some more detail regarding her post-human / singularity adjacent and other sci-fi/fantasy works:

- One Level Down (2025 novella) – Set in a simulated universe. Premise: a fifty-eight-year-old woman is forced to act and look five years old. The colonists “uploaded themselves into a simulation” to escape disease, aging, and death. A despot called “Daddy” controls reality, and the protagonist must act within the constraints of this virtual colony.

- Earlier works such as Wuftoom, Escape from the Pipe Men!, and Flicker and Mist (blending young adult, middle grade, fantasy, and SF) – Wuftoom is horror/sci-fi; Escape from the Pipe Men! involves space-opera settings, an alien zoo, and so on; Flicker and Mist treats invisibility as a skill; and there is The Word, among others. These have some speculative elements but aren’t clearly in post-human or singularity territory (unless the invisibility is treated as technological rather than magical).

It seems she is moving into transhumanist and singularity territory with more recent work targeted at adult audiences, and we look forward to seeing more of that. I can see why she’d be interested in being on this panel.

John Ashmead

John Ashmead is a writer who works in speculative fiction, horror, weird fiction, and the like. He also edits and contributes introductions, particularly for anthologies. He edited Tales from the Miskatonic University Library (2017), an anthology of weird/horror fiction with Lovecraftian overtones. His work tends toward the weird, horror, and speculative more than hard futurism.

I couldn’t find any evidence that he’s written a novel or story clearly about AI surpassing humanity, uploads, or a post-human singularity in the strong sense.

But, eh, every panel needs a moderator, and I like the guy. Plus, he had a lot to say about history and politics that made us think. So we’ll give him a pass on that.

Michael Capobianco

Michael mostly writes hard sci-fi. His non-fiction works seem to focus on copyright and other issues important to writers specifically. This makes perfect sense, as he led the SFWA for a while. Some of his collaborations with William Barton might touch on transhumanist or singularity themes, but for the most part he seems to steer clear of it. If singularity is your thing, you might check out “Iris” or “Alpha Centauri”.

Even so, it’s obvious why somebody interested in publishing, copyright, and AI liability would be relevant on a panel discussing AI and the singularity.

Tom Doyle

During the introductions, Tom Doyle mentioned having written at least one post-human/post-singularity book, so I decided to take a deeper look at his bibliography. ISFDB and ChatGPT were helpful in putting this list together. After some research, I concluded he was referring to Border Crosser.

- American Craftsmen – 2014 (Book 1, American Craftsmen Trilogy) Urban fantasy / magical realism / occult elements blended with modern fantasy.

- The Left-Hand Way – 2015 (Book 2, American Craftsmen Trilogy)

- War and Craft – 2017 (Book 3, American Craftsmen Trilogy)

- Border Crosser – 2020 (Stand-alone novel) Far-future science fiction, and the most likely candidate for post-human/post-singularity elements: the Little Red Reviewer interview says it’s set in a far future with an “apocalyptic hard singularity” in the background, plus emotional amnesia, scanners, and interstellar politics and machinations.

Doyle also has short stories published in various magazines, and, according to his social media, some audio/serial work (for example, the “Agent of Exiles” audio adventure series). His short fiction and nonfiction essays have appeared in Strange Horizons, Daily Science Fiction, Perihelion, Paradox Magazine, Kasma SF Magazine, and elsewhere.

Here are several of his essays and nonfiction pieces, along with where they appeared:

- “The Rapture, the Nerds, and the Singularity” listed under Apocalyptic and Millennial Studies; published in Fictitious Force #2.

- “Crossing Borders” (2004, short story) The origin story for Border Crosser. Published in Strange Horizons.

- His website lists “My relevant publications: ‘Christian Apocalyptic Fiction, Science Fiction and Technology,’ The End That Does: Art, Science and Millennial …” These look like essays or reflections combining theology, science fiction, and technology, including his thoughts on the end times, technology, and possibly the Singularity and the Rapture. “The Rapture, the Nerds, and the Singularity” is part of that collection.

- “Interview with Tom Doyle, author Border Crosser” by Little Red Reviewer includes both nonfiction interview material and background on his conception of the far-future, emotional amnesia, etc.

- In addition, sites such as Creative Life Network mention reviews, essays, and periodic contributions.

References: